DRS is essentially automated vMotion: when DRS recognizes an imbalance in the resources used on one ESXi server in a cluster, it rebalances the VMs among those servers. DRS also handles where a VM should be powered on for the first time. It works alongside VMware HA: HA is in charge of detecting any crashes and making sure that the VM is started on another node within the cluster, and when the ESXi server is repaired DRS will then rebalance the load again across the whole cluster.

There are a number of new features in DRS:

- Improved error reporting to help troubleshoot errors

- Enhanced vMotion compatibility, forcing your new servers to have backward compatibility with old servers

- Ability to relocate large swap files from shared and expensive storage to cheaper alternatives

When I first started using DRS I noticed that there was not an even number of VMs on each ESXi server within the cluster. An even count is not the intention of DRS: different VMs create different amounts of resource demand, and its primary goal is to keep a balanced load across the ESXi servers, so you may end up having more VMs on one ESXi server if their loads are not very heavy. DRS also won't keep moving VMs in order to keep the cluster perfectly balanced; only if the cluster becomes very unbalanced will it weigh up whether the penalty of a vMotion is worth the performance gain. DRS is clever enough to try to separate the large VMs (more CPU and memory) onto different ESXi servers and to move the smaller VMs if the balance is not right.

DRS has a threshold of up to 60 vMotion events per hour, and it checks for imbalances in the cluster once every five minutes. VMware also prevents "DRS storms". For example, if an ESXi server crashes, the VMs are started on other ESXi servers, DRS then sees an imbalance, and this could cause even more vMotion events as it tries to rebalance the cluster. This is prevented because DRS will wait at least five minutes before checking the cluster, and it only offers recommendations based on your migration threshold. This allows the administrator to control how aggressively DRS tries to rebalance the cluster.
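To make the "balance the load, not the VM count" idea concrete, here is a toy Python sketch. It is not VMware's algorithm: the imbalance metric (standard deviation of per-host load), the `min_gain` parameter standing in for the "is the vMotion worth it" check, and all host and VM names are assumptions made purely for illustration.

```python
from statistics import pstdev


def host_loads(placement, demand):
    """Sum the demand of the VMs placed on each host."""
    return {host: sum(demand[vm] for vm in vms) for host, vms in placement.items()}


def imbalance(placement, demand):
    """Standard deviation of the per-host loads (0 means perfectly balanced)."""
    return pstdev(list(host_loads(placement, demand).values()))


def recommend_move(placement, demand, min_gain):
    """Return (vm, source, target) for the single move that reduces the
    imbalance the most, or None if no move improves it by more than min_gain."""
    current = imbalance(placement, demand)
    best, best_gain = None, min_gain
    for src, vms in placement.items():
        for vm in vms:
            for dst in placement:
                if dst == src:
                    continue
                # Build a trial placement with this one VM moved from src to dst.
                trial = {h: [v for v in vs if v != vm] for h, vs in placement.items()}
                trial[dst].append(vm)
                gain = current - imbalance(trial, demand)
                if gain > best_gain:
                    best, best_gain = (vm, src, dst), gain
    return best


# Hypothetical demand figures (arbitrary units) and host names.
demand = {"big": 50, "small1": 10, "small2": 10}
before = {"esx1": ["big", "small1", "small2"], "esx2": []}
after = {"esx1": ["small1", "small2"], "esx2": ["big"]}

print(recommend_move(before, demand, min_gain=5))  # ('big', 'esx1', 'esx2')
print(recommend_move(after, demand, min_gain=5))   # None
```

Note that after the move one host runs two VMs and the other only one, yet no further move is recommended: the loads (20 versus 50) cannot be improved by moving any single VM, which mirrors the uneven VM counts described above.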

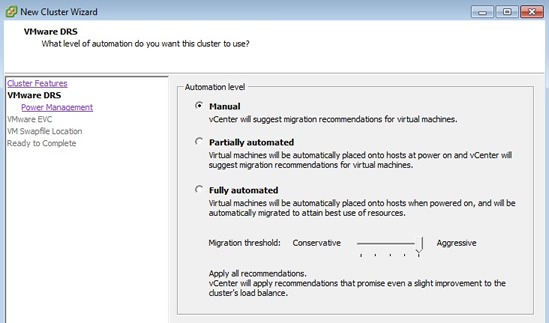

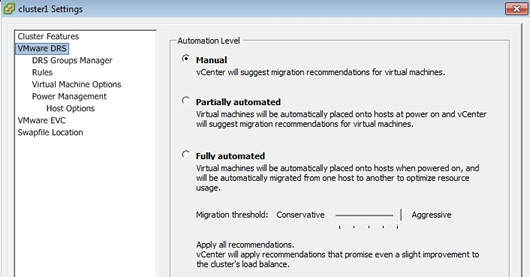

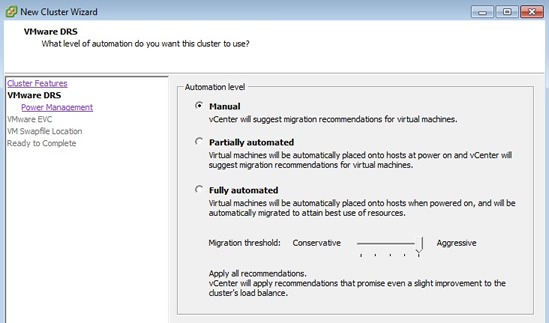

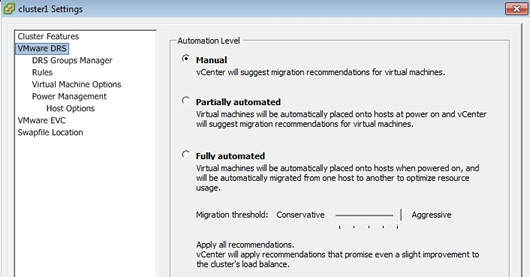

You can choose from three different levels of automation and also set a migration threshold; you can also use resource pools.

| Automation level | Behaviour |
| --- | --- |
| Manual | The administrator is offered recommendations of where to place a VM and whether to vMotion a VM |
| Partially automated | DRS decides where a VM will execute, the administrator is offered recommendations of whether to vMotion a VM |
| Fully automated (default) | DRS decides where a VM will execute and whether to vMotion, based on a threshold parameter, obeying any rules or exclusions created by the administrator |
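The three levels differ only in which of the two decisions (initial placement and migration) DRS takes by itself. A small Python sketch of that mapping follows; the enum and function names are my own and do not correspond to any VMware API.

```python
from enum import Enum


class Automation(Enum):
    MANUAL = "manual"
    PARTIAL = "partially automated"
    FULL = "fully automated"


def placement_is_automatic(level):
    """True when DRS chooses the host at power-on without asking."""
    return level in (Automation.PARTIAL, Automation.FULL)


def migration_is_automatic(level):
    """True when DRS performs vMotion without asking."""
    return level is Automation.FULL


for level in Automation:
    print(level.value, placement_is_automatic(level), migration_is_automatic(level))
```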

Setting DRS to manual or partial does not break VMware HA: if an ESXi server fails, the VM gets powered on without asking where to power it on, and you will be asked later to rebalance the cluster. It all depends on what you require within your environment and the SLAs that you have in place. Some administrators prefer to select manual and have total control, which is OK if your environment is small; larger companies may go fully automated as they generally have large cluster environments and lots of spare capacity. You can exclude certain VMs from DRS if you so wish; there are many options and permutations available.

You can also set a migration threshold for DRS, which lets you set how aggressive DRS should be in balancing the cluster. There are five threshold levels:

| Level | Name | Behaviour |
| --- | --- | --- |
| Level 1 | Conservative | Triggers a vMotion if the VM has a level five priority rating |
| Level 2 | Moderately Conservative | Triggers a vMotion if the VM has a level four or more priority rating |
| Level 3 | Default | Triggers a vMotion if the VM has a level three or more priority rating |
| Level 4 | Moderately Aggressive | Triggers a vMotion if the VM has a level two or more priority rating |
| Level 5 | Aggressive | Triggers a vMotion if the VM has a level one or more priority rating |
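Read as a rule, the table says that threshold level N acts on priority ratings of 6 - N or higher. A minimal Python sketch of that arithmetic (the function names are hypothetical):

```python
def minimum_rating(threshold_level):
    """Lowest priority rating that triggers a vMotion at the given threshold level."""
    if not 1 <= threshold_level <= 5:
        raise ValueError("threshold level must be between 1 and 5")
    return 6 - threshold_level


def triggers_vmotion(rating, threshold_level):
    """True if a priority rating is high enough for the threshold level."""
    return rating >= minimum_rating(threshold_level)


print(minimum_rating(1))       # 5 (conservative)
print(minimum_rating(3))       # 3 (default)
print(triggers_vmotion(2, 3))  # False
print(triggers_vmotion(2, 5))  # True
```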

DRS automation levels allow you to specify a global rule for the cluster, and you can also set the level on a per-VM basis. You can completely exclude a VM from DRS (you might want to exclude clustered servers), and you can also impose affinity and anti-affinity rules, for example to make sure that certain VMs do not run on the same ESXi server and therefore do not share the same physical CPUs or vSwitch.

Configuring DRS

Firstly you must make sure vMotion has been set up and tested; you really should check every VM and make sure it has no problems migrating to any ESXi server within the cluster. I will cover the manual mode only, as partial and automatic are hybrids of the manual mode anyway. I will also use the aggressive threshold so that we can at least see some action.

Follow the steps below to set up DRS in manual mode

DRS configuration (manual mode)

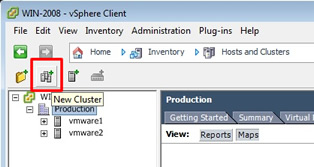

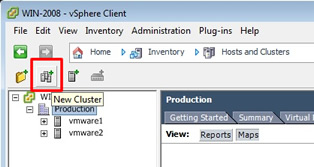

First we have to create a cluster by selecting the "new cluster" icon

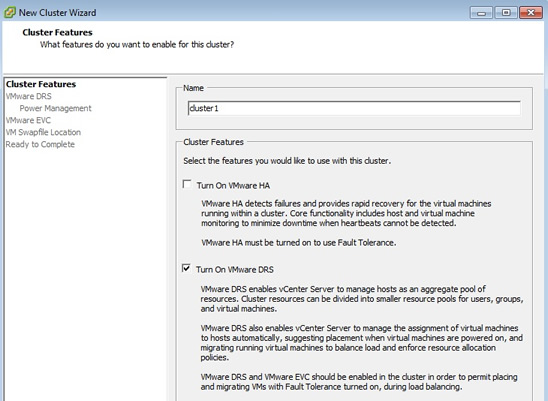

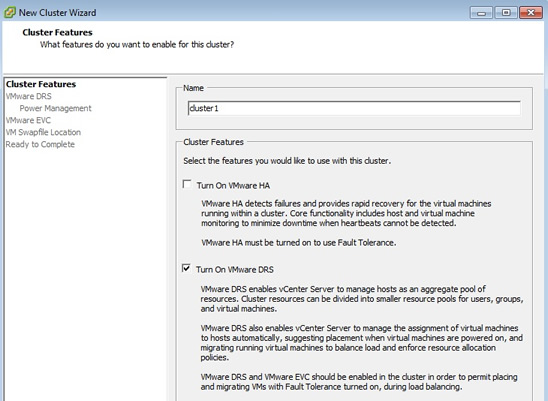

Enter the cluster name and select "Turn on VMware DRS" only; we will be covering HA later.

Now I select manual as I want full control

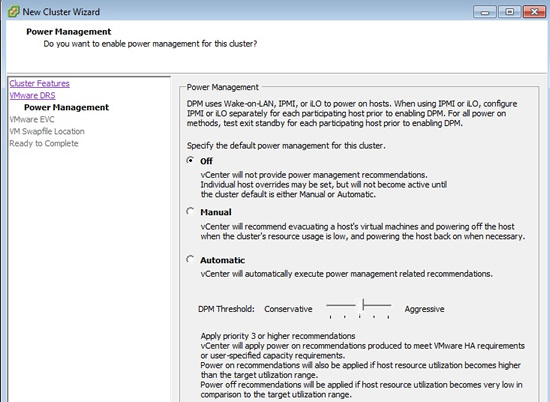

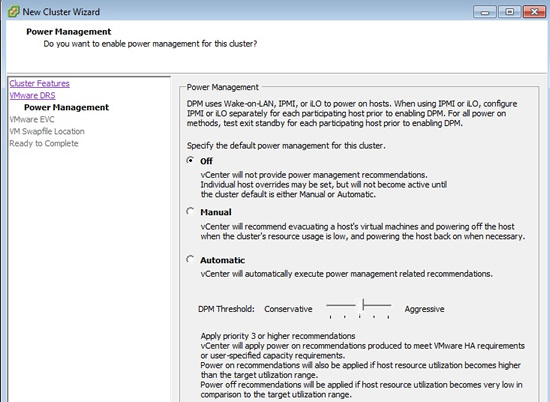

You can use power management features like wake-on-LAN or iLO; I select off for the time being as we will be covering power management later

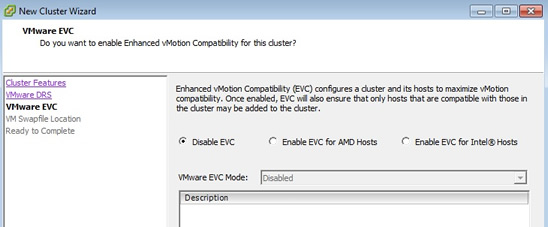

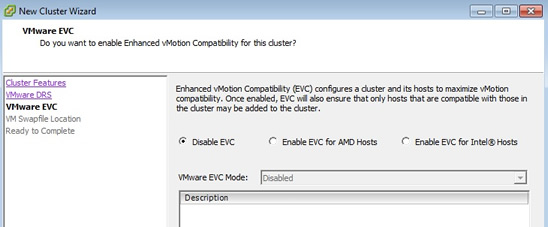

Again, I will cover Enhanced vMotion Compatibility later in this section, so disable it for the time being

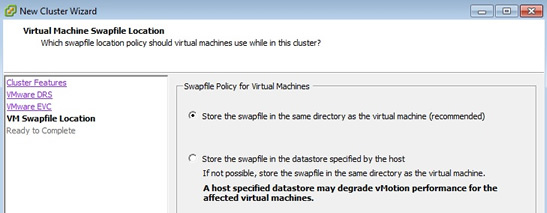

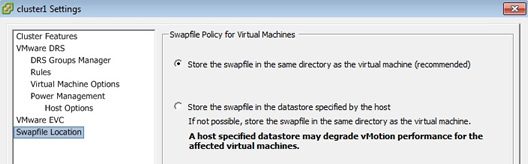

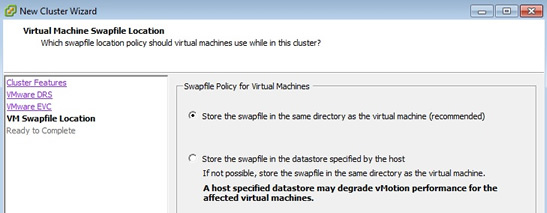

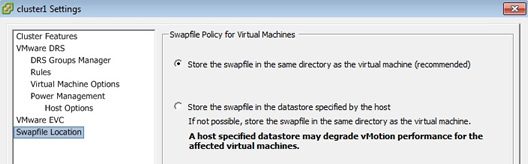

We will keep the VMkernel swap file in the same directory as the VM

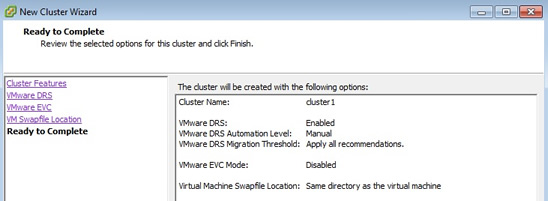

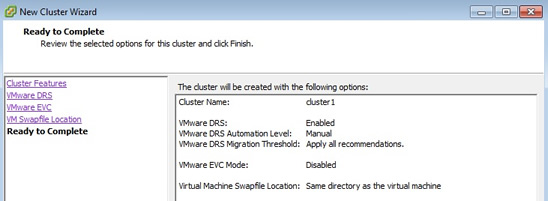

Finally we get to the summary screen

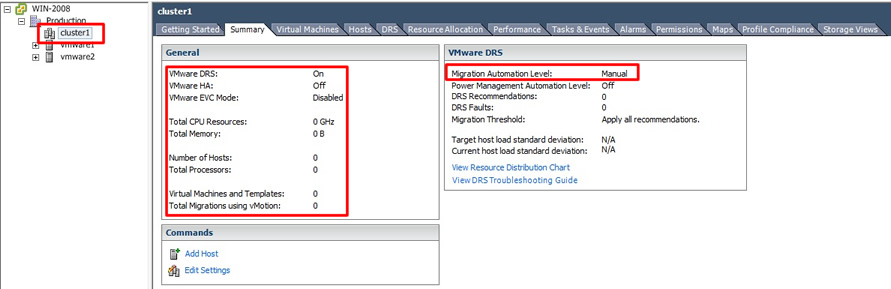

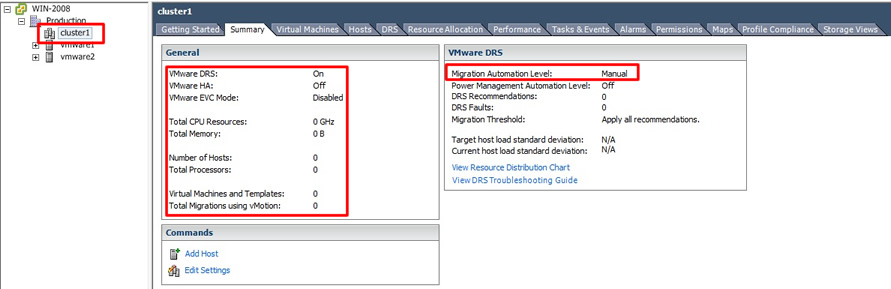

Before we start to add ESXi servers to the cluster I just want you to see a few things. Notice that in the general panel DRS is on but we have no resources available, as we have not yet added any ESXi servers to the cluster; the VMware DRS panel is a bit sparse as well

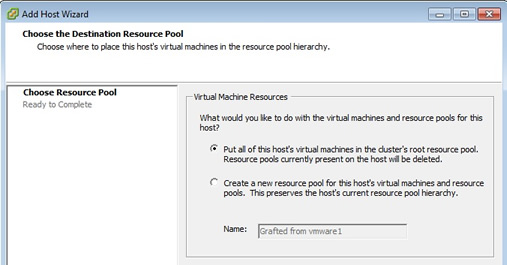

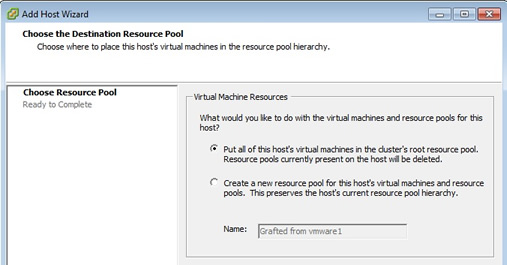

We can add an ESXi server to the cluster by simply dragging and dropping it onto the cluster. When you do this the following screen appears; here I choose to use the root resource pool

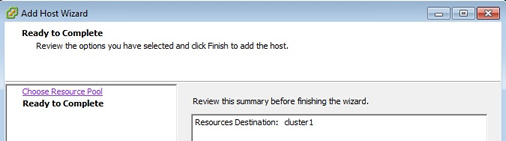

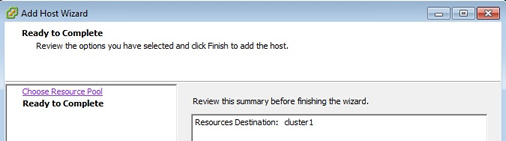

You then get a summary screen

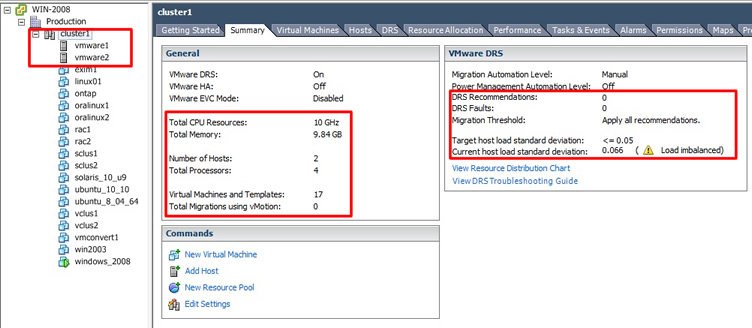

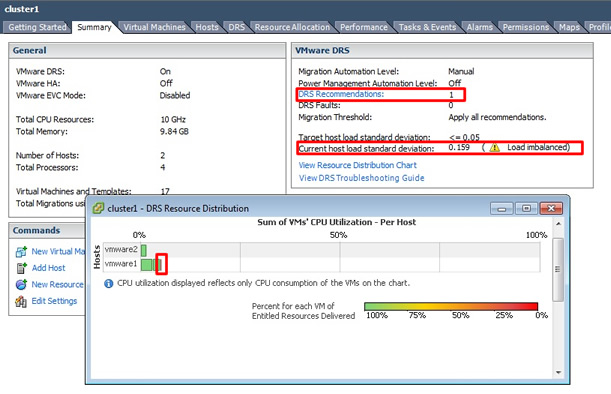

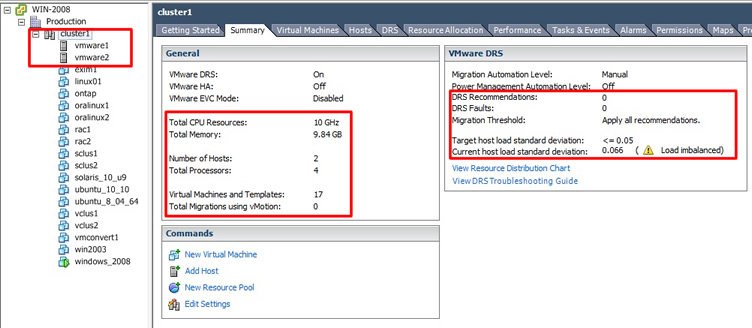

After adding both my ESXi servers to the cluster we can clearly see the available resources increase: there are now 2 hosts and 4 CPUs, and the total memory and CPU resources are shown. The DRS panel has also come alive; there are no recommendations yet, but that is because we have hardly any VMs powered on

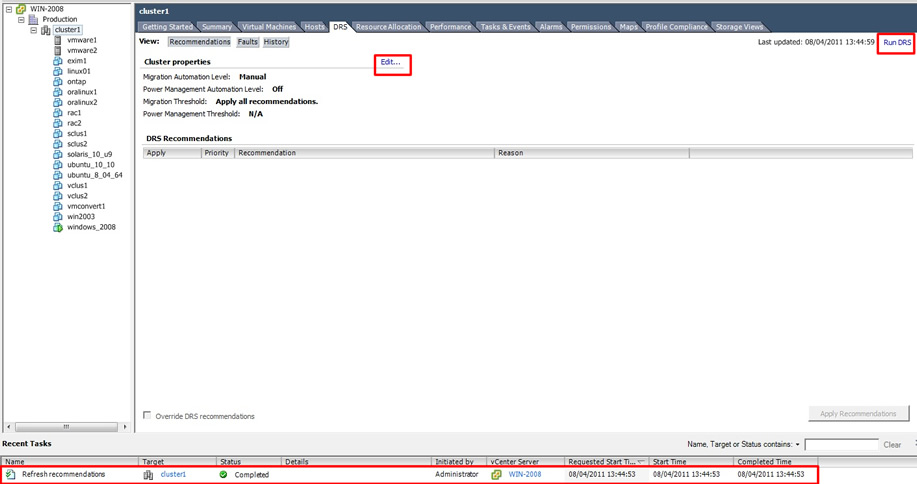

You can "run DRS" via the DRS tab in the cluster (see the top right-hand corner), and you can see when it has completed in the "recent tasks" window at the bottom. You can also edit the DRS configuration as seen below; we will be going into much more detail later

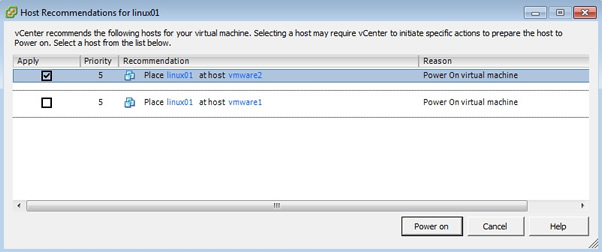

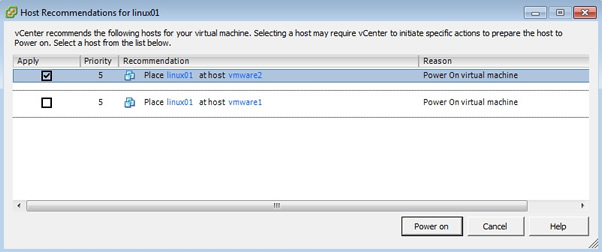

So let's get some recommendations going. If you look at the above screenshot, I already have a VM running (windows_2008) on vmware1, so when I try to power on the VM linux01, DRS kicks in and the recommendation screen appears. It already knows that I have a VM running on vmware1, so it suggests that I should power this one on the other ESXi server (which has nothing running). I am going to ignore this and continue to power it on vmware1

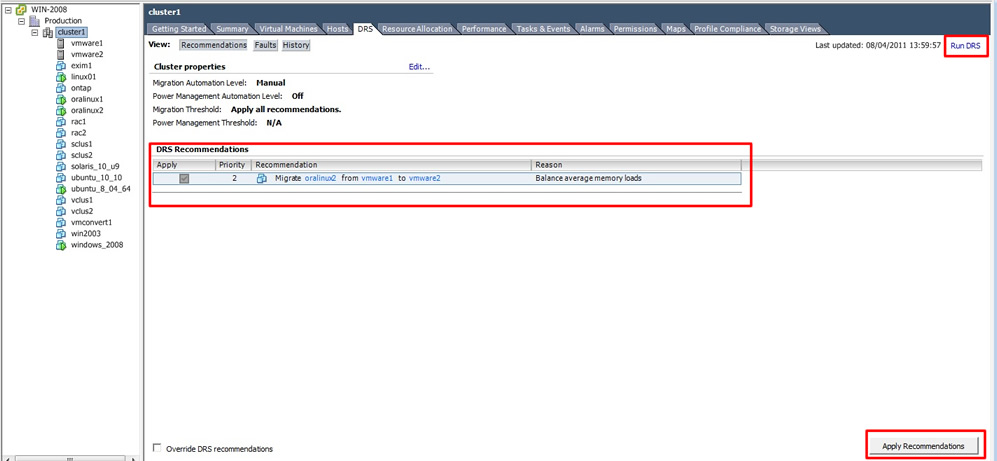

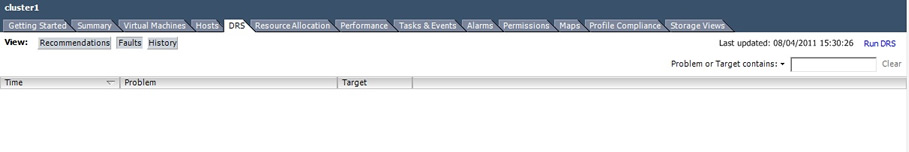

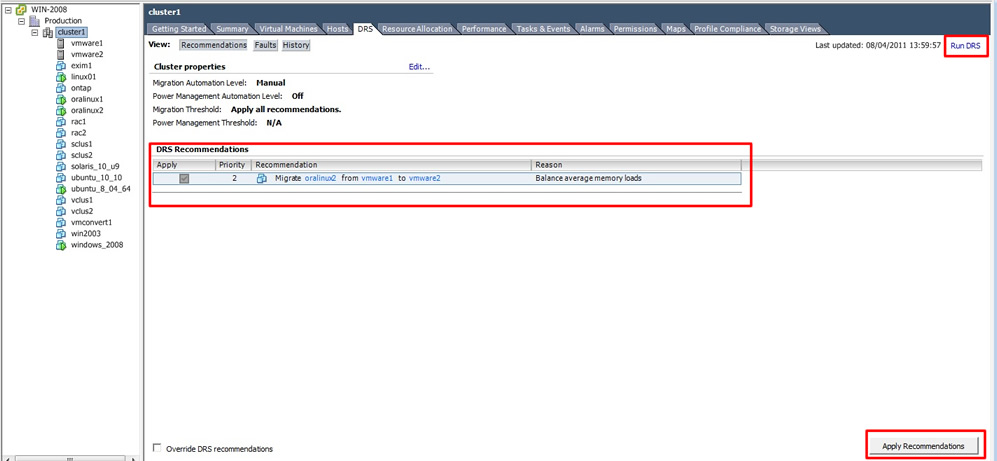

After I had powered on a number of VMs, a "Run DRS" eventually produced the recommendation below. It sees that the cluster has become unbalanced and recommends that I move the VM oralinux02 from vmware1 to vmware2. By selecting the "Apply Recommendations" button at the bottom right-hand corner, DRS will move the VM for me; if I had the fully automated level turned on, it would have performed this task without asking me.

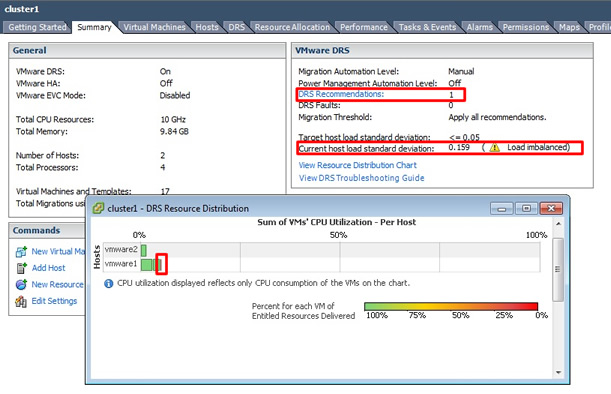

You can also see cluster imbalances from the summary screen of the cluster. If you look in the VMware DRS panel you can see 1 recommendation and a warning icon, and if you click on "View resource distribution chart" you can see that vmware1 has, ever so slightly, a red part on the graph, hence the warning and the recommendation.

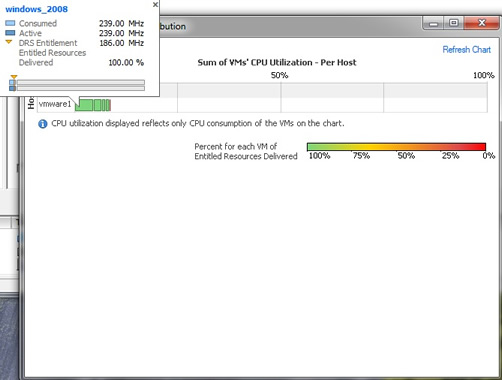

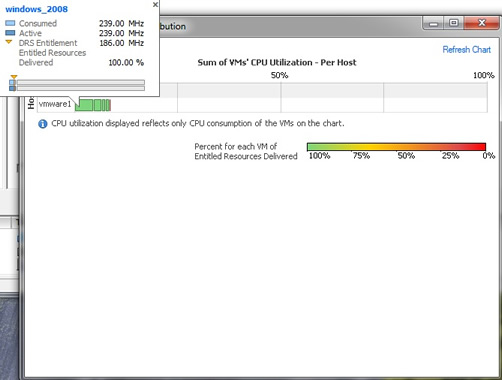

Each green box on the DRS resource distribution chart is a VM, and its size indicates how much resource the VM consumes. If you hover over one of the green boxes (I have selected the largest green box), you get a more detailed look at what resources the VM uses.

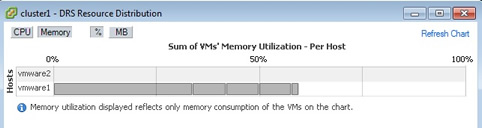

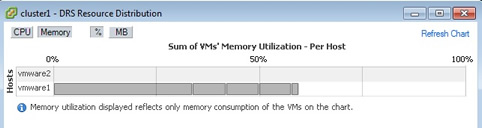

I then selected the memory tab. As you can see, I am using more than 50% of the total memory on vmware1, hence the recommendation to migrate some VMs onto vmware2. I try to make the most of my hardware and generally try to keep a balance between the ESXi servers within the cluster.

DRS Options

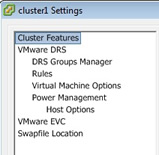

I am now going to cover the other options that are available to you

DRS cluster affinity/anti-affinity rules

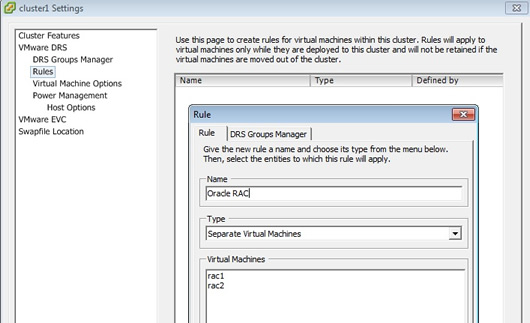

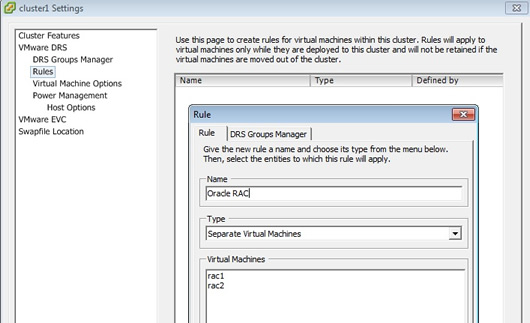

From the DRS tab select "edit", then select Rules. Here I create a rule for my Oracle RAC: I do not want both my RAC nodes on the same ESXi server, so I select "Separate Virtual Machines" and add the two RAC nodes. Remember that if I only had one ESXi server it would start both nodes on that same server, but if another ESXi server is available it will recommend that I move one of the RAC nodes.
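The effect of a "Separate Virtual Machines" rule can be pictured as a simple check over the current placement. The Python sketch below is only an illustration of that check; the function name, host names and VM names are hypothetical and it does not describe how DRS evaluates rules internally.

```python
def separation_violations(placement, separate_group):
    """Return {host: [vms]} for every host running more than one VM
    from a group that is supposed to be kept apart."""
    group = set(separate_group)
    violations = {}
    for host, vms in placement.items():
        together = sorted(set(vms) & group)
        if len(together) > 1:
            violations[host] = together
    return violations


rac_nodes = ["rac1", "rac2"]

# Both RAC nodes on one host: the rule is violated.
print(separation_violations({"vmware1": ["rac1", "rac2"], "vmware2": []}, rac_nodes))
# {'vmware1': ['rac1', 'rac2']}

# One node moved to the other host: no violation.
print(separation_violations({"vmware1": ["rac1"], "vmware2": ["rac2"]}, rac_nodes))
# {}
```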

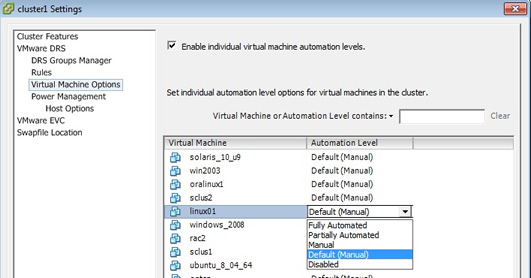

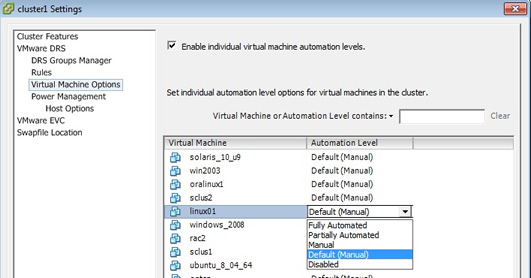

Custom automation levels

I did mention above that you can have individual settings for each VM. To change the automation level of a VM, just select the automation level column and a drop-down list will appear, then select one of the five options

Changing the DRS automation level

We can change the automation level in the VMware DRS option

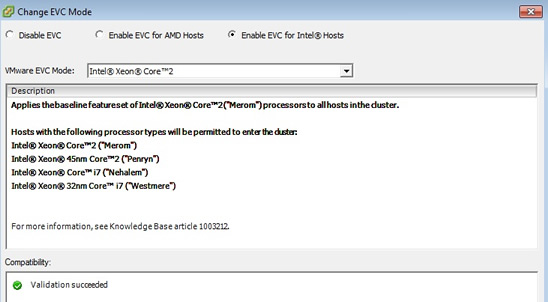

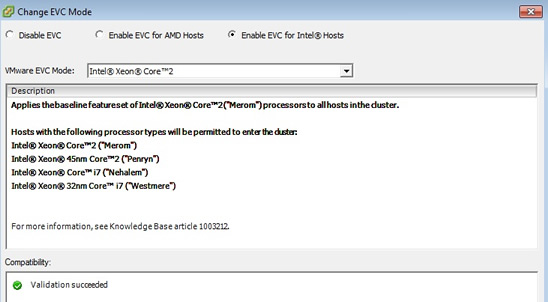

Enabling Enhanced vMotion Compatibility

In an ideal world all the ESXi servers would be the same, but there are times when you want to upgrade, and Enhanced vMotion Compatibility (EVC) helps address CPU incompatibility issues. New CPUs are able to mask attributes of the processor, and EVC uses this feature to make them compatible with older ESX servers. Conceptually, EVC creates a common baseline of CPU attributes to engineer compatibility. To enable this feature you must turn off all your VMs, because EVC generates CPU identity masks to engineer compatibility between hosts. It will also validate all your ESXi hosts for compatibility, as seen in the screen below. VMware will be evolving this new feature to allow upgrades of all ESX servers using different CPU architectures.
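The "common baseline" idea can be pictured as the set of CPU features that every host in the cluster has, with anything beyond that hidden from the VMs. The Python sketch below is purely conceptual: the feature lists are made up for illustration, and it does not reflect how EVC actually selects or applies its baseline.

```python
def evc_baseline(host_features):
    """Features common to every host (the conceptual baseline)."""
    feature_sets = [set(features) for features in host_features.values()]
    return set.intersection(*feature_sets) if feature_sets else set()


def masked_features(host_features):
    """Per host, the features that would have to be hidden to match the baseline."""
    baseline = evc_baseline(host_features)
    return {host: set(features) - baseline for host, features in host_features.items()}


# Hypothetical hosts: an older server and a newer one with an extra feature.
hosts = {
    "old_esx": ["sse2", "sse3"],
    "new_esx": ["sse2", "sse3", "sse4.1"],
}

print(sorted(evc_baseline(hosts)))  # ['sse2', 'sse3']
print(masked_features(hosts))       # {'old_esx': set(), 'new_esx': {'sse4.1'}}
```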

VMkernel swap file

You can change the VMkernel swap file location, however take heed of the warning message when changing the location

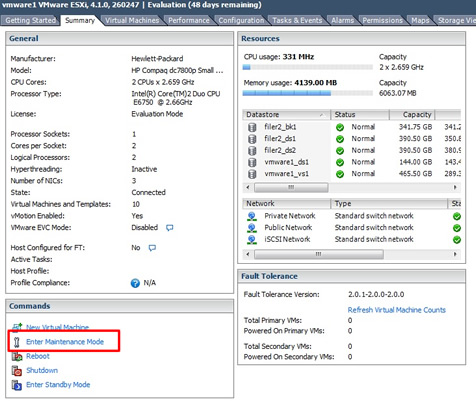

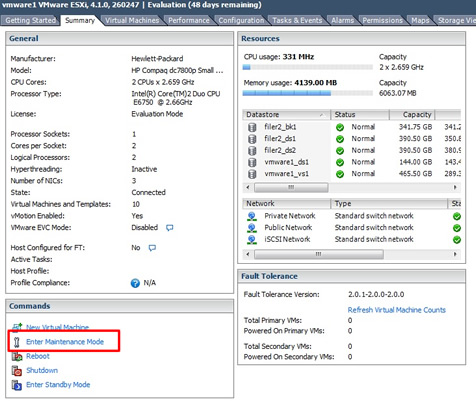

I just want to cover maintenance mode, which you can select on the summary page of an ESXi server. Maintenance mode is an isolation state used whenever you need to carry out critical ESXi server tasks such as upgrading firmware, ESXi itself, memory or CPUs, or patching the server.

It will prevent other vCenter users from the following:

- creating new VMs and powering them on

- triggering any vMotion events, whether created by an administrator or by automatic DRS

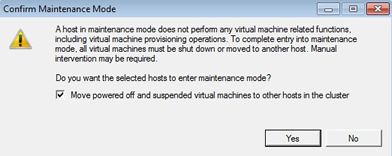

Maintenance mode survives reboots of the server, which gives administrators time to confirm that their changes have been effective before VMs can run on the ESXi server again. When you turn on maintenance mode with DRS set to fully automated, all the VMs will be moved automatically; you will be asked to confirm that you want to enter maintenance mode and that you want to move the VMs, as seen on the screen below

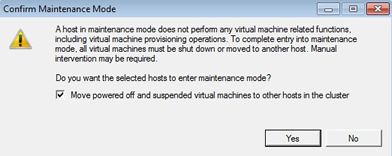

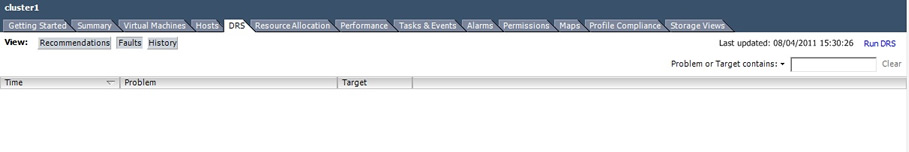
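Evacuating a host for maintenance can be thought of as finding a new home for each of its VMs. The Python sketch below uses a simple greedy strategy (largest VM first, each sent to the least-loaded remaining host). This strategy, the function name and the sample figures are my own assumptions for illustration and are not how DRS chooses targets.

```python
def evacuate(placement, demand, host):
    """Return a list of (vm, target) moves that empty `host`."""
    # Current load of every host except the one entering maintenance mode.
    others = {
        h: sum(demand[vm] for vm in vms)
        for h, vms in placement.items()
        if h != host
    }
    if not others:
        raise ValueError("no other host available to receive the VMs")
    moves = []
    for vm in sorted(placement[host], key=lambda v: demand[v], reverse=True):
        target = min(others, key=others.get)  # least-loaded remaining host
        others[target] += demand[vm]
        moves.append((vm, target))
    return moves


# Hypothetical cluster: esx1 is about to enter maintenance mode.
demand = {"a": 30, "b": 20, "c": 10, "d": 25}
placement = {"esx1": ["a", "b", "c"], "esx2": ["d"], "esx3": []}

print(evacuate(placement, demand, "esx1"))
# [('a', 'esx3'), ('b', 'esx2'), ('c', 'esx3')]
```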

DRS Faults and History

If you select the DRS tab and then the faults button, you get the screen below. From here you can see if there have been any problems regarding DRS, such as incompatibility problems, rules that have been violated, or insufficient capacity to move a VM. I don't have any faults, but here is a screenshot of the faults page

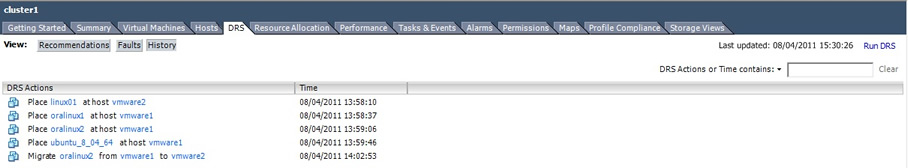

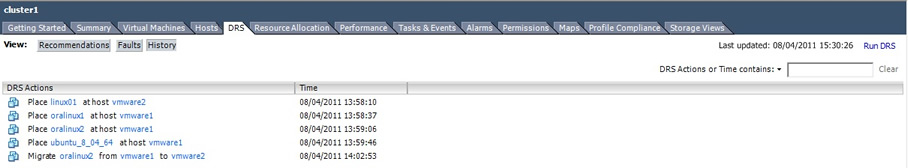

Lastly we come to the history. If you have set up fully automated DRS you will not know where your VMs will be hosted, as DRS could be moving them from one day to the next. To keep track of what is going on there is a history page that allows you to see all the DRS events: select the cluster, then the DRS tab, and finally the history button. You can see from my history that some DRS events have occurred; remember that only 60 vMotion events can occur within one hour.
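A cap such as "60 vMotion events per hour" can be modelled as a sliding-window budget. The Python sketch below is one way to express that; the class name and the sliding-window approach are assumptions for illustration, since how DRS enforces the limit internally is not covered here.

```python
from collections import deque


class VMotionBudget:
    """Allow at most `limit` events within any `window_seconds` period."""

    def __init__(self, limit=60, window_seconds=3600):
        self.limit = limit
        self.window = window_seconds
        self.events = deque()  # timestamps of the events already allowed

    def try_record(self, now):
        """Record an event at time `now` (seconds); return False if over budget."""
        # Forget events that have fallen out of the window.
        while self.events and now - self.events[0] >= self.window:
            self.events.popleft()
        if len(self.events) >= self.limit:
            return False
        self.events.append(now)
        return True


# Small limit so the behaviour is easy to follow.
budget = VMotionBudget(limit=2, window_seconds=3600)
print(budget.try_record(0))     # True
print(budget.try_record(10))    # True
print(budget.try_record(20))    # False, budget used up
print(budget.try_record(3600))  # True, the event at t=0 has expired
```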